Usability testing is a crucial process in product development that helps identify and resolve user experience issues. By observing real users interacting with a product or interface, designers and developers gain valuable insights into its strengths and weaknesses. This method of evaluation allows teams to make data-driven decisions to enhance user satisfaction and overall product quality.

Effective usability testing involves careful planning, execution, and analysis. Testers select appropriate participants, design realistic tasks, and collect both quantitative and qualitative data. The results often reveal unexpected problems and user behaviors that may not have been apparent during the initial design phase.

As technology evolves, so do usability testing techniques. Remote testing tools now enable researchers to gather feedback from users across different locations and time zones, expanding the scope and diversity of user input. This adaptation has made usability testing more accessible and cost-effective for businesses of all sizes.

Key Takeaways

- Usability testing identifies user experience issues through direct observation and feedback

- Careful planning and analysis are essential for effective usability testing

- Remote testing tools have expanded the reach and efficiency of usability studies

Understanding Usability Testing

Usability testing evaluates how well users can interact with a product or interface. It provides valuable insights for improving user experience and creating more user-friendly designs.

Definition and Purpose

Usability testing is a method of evaluating a product or service by observing real users as they interact with it. The primary purpose is to identify usability issues, collect qualitative and quantitative data, and determine user satisfaction with the product.

This process helps UX researchers and designers create more intuitive and efficient user interfaces. By observing users complete tasks, teams can pinpoint areas of confusion or frustration and make necessary improvements.

Usability testing also measures the effectiveness, efficiency, and satisfaction of a product or service. It ensures that designs meet user needs and expectations, ultimately leading to better user experiences.

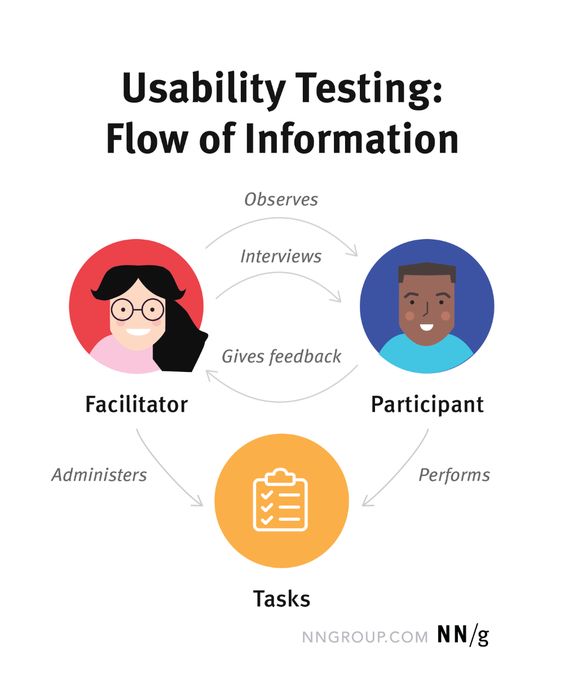

Roles in Usability Testing

Several key roles contribute to successful usability testing sessions. The moderator or facilitator guides participants through the testing process, asking questions and providing instructions as needed.

Participants are representative users who complete tasks and provide feedback on their experience. Their actions and insights form the basis for usability improvements.

UX researchers analyze the data collected during testing sessions. They identify patterns, draw conclusions, and make recommendations for design changes.

Designers and developers often observe sessions to gain first-hand insights into user behavior. This direct exposure helps them create more user-centered solutions.

Types of Usability Testing

Moderated usability testing involves a facilitator guiding participants through tasks and asking follow-up questions. This approach allows for real-time probing and clarification.

Unmoderated usability testing lets participants complete tasks independently, often using specialized software. This method can reach a larger audience and is typically more cost-effective.

Remote usability testing can be either moderated or unmoderated, and lets participants take part from their own locations. This approach offers flexibility and broader geographic reach.

In-person usability testing takes place in a controlled environment, such as a lab. It provides opportunities for direct observation and immediate feedback.

Qualitative usability testing focuses on collecting descriptive data about user experiences. Quantitative usability testing measures specific metrics like task completion time or error rates.

Planning and Preparation

Effective usability testing requires thorough planning and preparation. Key elements include developing a comprehensive testing plan, selecting suitable participants, and preparing necessary materials.

Developing a Testing Plan

A well-structured testing plan outlines the goals, methods, and scope of the usability test. It defines specific tasks and scenarios that participants will engage with during the session. The plan should align with the project’s objectives and focus on evaluating critical aspects of the design.

Identify key metrics to measure, such as task completion rates, time on task, and user satisfaction. Decide on appropriate usability testing methods, such as think-aloud protocols or eye-tracking, based on the project’s needs.

Consider the testing environment and any technical requirements for conducting the sessions. Allocate sufficient time for each participant to complete tasks and provide feedback.

Selecting Participants

Choose participants who represent the target user group for the product or service being tested. Create user personas to guide the selection process and ensure diversity among testers.

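Recruit a mix of users with varying levels of experience and familiarity with similar products. Choose a sample size that fits the study's goals and resources: qualitative studies often use around five participants per round, while quantitative benchmarks need considerably larger samples to produce stable metrics.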
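
One common way to reason about sample size for qualitative rounds is the problem-discovery model associated with Nielsen and Landauer. It estimates the share of problems found by n participants as 1 - (1 - p)^n, where p is the probability that a single participant runs into a given problem. The sketch below assumes p = 0.31, a frequently cited average; the real value varies by product, task, and participant mix, so treat the output as a rough planning aid rather than a guarantee.

```python
def proportion_found(p: float, n: int) -> float:
    """Expected share of problems uncovered by n participants,
    assuming each problem is encountered independently with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    return 1.0 - (1.0 - p) ** n


def participants_needed(p: float, target: float) -> int:
    """Smallest n whose expected discovery rate reaches the target share."""
    if not 0.0 < p < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("p and target must be strictly between 0 and 1")
    n = 1
    while proportion_found(p, n) < target:
        n += 1
    return n


if __name__ == "__main__":
    P = 0.31  # assumed per-participant detection probability
    for n in (1, 3, 5, 10):
        print(f"{n:>2} participants -> {proportion_found(P, n):.0%} of problems")
    print("For 90% coverage:", participants_needed(P, 0.90), "participants")
```

The curve flattens quickly, which is why many teams prefer several small rounds over one large one.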

Screen potential participants to ensure they meet the desired criteria. Consider factors such as age, gender, technical proficiency, and relevant domain knowledge.

Usability Testing Materials

Prepare all necessary materials before conducting the test sessions. This includes prototypes or working versions of the design to be evaluated.

Develop clear task instructions and scenarios for participants to follow. Create questionnaires or surveys to gather additional feedback after the testing sessions.

Prepare consent forms and non-disclosure agreements if required. Set up recording equipment for capturing user interactions and verbal feedback.

Organize note-taking templates for observers to document key observations during the sessions. Create a moderator script to ensure consistency across multiple test sessions.

Executing the Test

Usability testing involves carefully observing users as they interact with a product or system. The execution phase is where key insights are gathered through structured tasks and observations.

Moderated vs. Unmoderated Approaches

Moderated testing involves a researcher guiding participants through tasks and asking follow-up questions. This approach allows for real-time clarification and probing into user behavior. The moderator can adjust tasks on the fly based on user responses.

Unmoderated testing occurs without direct oversight. Participants complete tasks independently, often using screen recording software to capture their actions. This method enables testing with larger sample sizes and participants in diverse locations.

Both approaches have merits. Moderated tests provide richer qualitative data, while unmoderated tests offer broader quantitative insights. The choice depends on research goals, budget, and timelines.

Remote and In-Person Differences

Remote testing allows researchers to observe users in their natural environments. Participants use their own devices, providing authentic context. Screen sharing and video calls facilitate observation.

In-person testing occurs in controlled settings like usability labs or conference rooms. This setup offers more control over the testing environment and equipment. Researchers can closely monitor facial expressions and body language.

Remote tests are more flexible and cost-effective. In-person tests enable deeper insights into non-verbal cues and physical interactions with products.

User Actions and Observations

During test execution, researchers focus on user actions and behaviors. They note task completion rates, time spent on tasks, and navigation paths. Error frequency and recovery attempts are also recorded.

Observations extend beyond quantitative metrics. Researchers listen for verbal comments, frustrations, or moments of delight. Body language and facial expressions provide additional context.

User interactions with specific interface elements are closely monitored. This includes button clicks, scrolling behavior, and menu selections. Such detailed observations help identify usability issues and areas for improvement.

Data Collection and Analysis

Usability testing generates valuable insights through both qualitative and quantitative methods. Researchers gather participant feedback, observe behaviors, and measure key metrics to identify usability issues and improvement opportunities.

Qualitative Data

Qualitative data provides rich insights into user experiences and perceptions. Researchers collect this information through observation, think-aloud protocols, and interviews.

Participant feedback often reveals usability issues not captured by quantitative metrics alone. Testers note facial expressions, body language, and verbal comments to understand user frustrations and satisfaction.

Think-aloud protocols encourage users to vocalize their thoughts while completing tasks. This method uncovers cognitive processes and decision-making patterns.

Post-test interviews allow researchers to probe deeper into user experiences. Open-ended questions elicit detailed responses about specific interface elements or task flows.

Quantitative Data

Quantitative data provides measurable insights into user performance and behavior. Researchers track metrics such as task completion rates, time on task, and error rates.

Task completion rates indicate the percentage of users who successfully achieve test objectives. This metric helps identify problematic areas in the interface.

Time on task measures efficiency, revealing which tasks users find most challenging. Longer completion times may signal confusing navigation or complex processes.

Error rates highlight specific usability issues within the interface. Tracking these helps prioritize improvements for problematic features or interactions.

User satisfaction scores, often collected through standardized questionnaires, provide numerical data on overall user experience.
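
The metrics above can be derived from a simple per-attempt log. This is a minimal sketch assuming each attempt is recorded with the hypothetical fields shown (`participant`, `task`, `completed`, `seconds`, `errors`); averaging time on task over successful attempts only is one common convention, not the only one.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Attempt:
    participant: str
    task: str
    completed: bool
    seconds: float  # time on task for this attempt
    errors: int     # errors the observer logged during the attempt


def task_metrics(attempts: list[Attempt], task: str) -> dict[str, float]:
    """Summarize completion rate, time on task, and errors for one task."""
    rows = [a for a in attempts if a.task == task]
    if not rows:
        raise ValueError(f"no attempts recorded for task {task!r}")
    successes = [a for a in rows if a.completed]
    return {
        "completion_rate": len(successes) / len(rows),
        # Averaged over successful attempts only; failed attempts often end
        # when the participant gives up, which would distort the mean.
        "mean_time_success": mean(a.seconds for a in successes) if successes else float("nan"),
        "errors_per_attempt": sum(a.errors for a in rows) / len(rows),
    }


if __name__ == "__main__":
    log = [
        Attempt("P1", "checkout", True, 95.0, 0),
        Attempt("P2", "checkout", False, 240.0, 3),
        Attempt("P3", "checkout", True, 130.0, 1),
        Attempt("P4", "checkout", True, 110.0, 0),
    ]
    print(task_metrics(log, "checkout"))
```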

Synthesizing Results

Combining qualitative and quantitative data creates a comprehensive understanding of usability issues and user experiences. Researchers analyze patterns across datasets to identify key findings.

Qualitative insights often explain quantitative results. For example, user comments may reveal why a particular task had low completion rates or high error rates.

Researchers create data visualizations to identify trends and correlations. Charts and graphs help communicate findings to stakeholders and design teams.

Prioritization matrices help balance the severity of usability issues against the effort required to fix them. This guides decision-making for product improvements.
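
A severity-versus-effort matrix can be sketched in code by scoring each issue and sorting it into a quadrant. The 1-to-4 scales, the midpoint threshold of 2.5, and the quadrant labels below are illustrative assumptions rather than a standard.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    title: str
    severity: int  # 1 = cosmetic ... 4 = blocks task completion (assumed scale)
    effort: int    # 1 = trivial fix ... 4 = major rework (assumed scale)


def quadrant(issue: Issue, threshold: float = 2.5) -> str:
    """Place an issue in one of four severity/effort quadrants."""
    high_severity = issue.severity > threshold
    high_effort = issue.effort > threshold
    if high_severity and not high_effort:
        return "quick win"
    if high_severity and high_effort:
        return "major project"
    if not high_severity and not high_effort:
        return "fill-in"
    return "deprioritize"


def prioritize(issues: list[Issue]) -> list[tuple[str, Issue]]:
    """Order issues: quick wins first, then by severity (desc) and effort (asc)."""
    order = {"quick win": 0, "major project": 1, "fill-in": 2, "deprioritize": 3}
    ranked = sorted(issues, key=lambda i: (order[quadrant(i)], -i.severity, i.effort))
    return [(quadrant(i), i) for i in ranked]


if __name__ == "__main__":
    backlog = [
        Issue("Unlabeled icon in toolbar", severity=2, effort=1),
        Issue("Checkout button hidden below fold", severity=4, effort=1),
        Issue("Search ignores typos", severity=3, effort=4),
        Issue("Legacy settings page layout", severity=1, effort=4),
    ]
    for label, issue in prioritize(backlog):
        print(f"{label:<13} {issue.title}")
```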

The final analysis informs actionable recommendations for enhancing the user interface and overall product usability.

Improving Design Through Feedback

Feedback-driven design refinement leads to more user-friendly products. User input highlights issues and opportunities for enhancement, guiding designers to create solutions that better meet user needs.

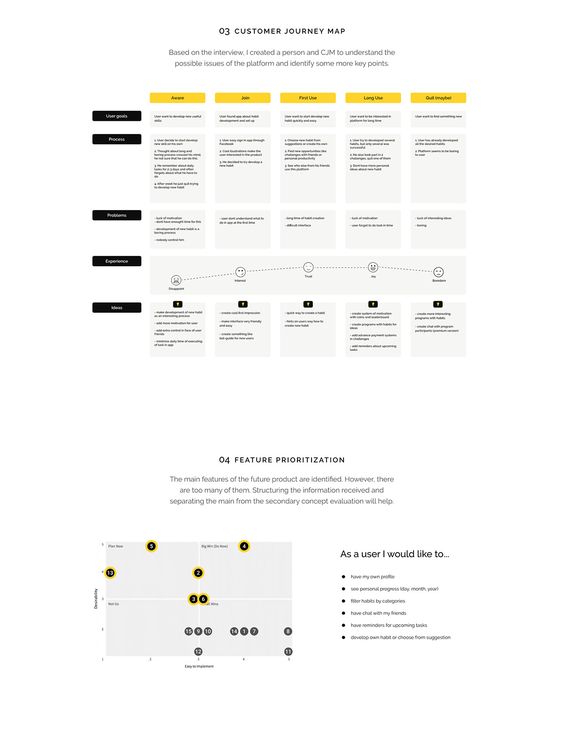

Identifying Pain Points

User feedback reveals frustrations and difficulties in product interactions. Designers analyze this input to pinpoint specific usability problems. Common pain points include confusing navigation, slow load times, and unintuitive interfaces.

Surveys, interviews, and user testing sessions gather valuable insights. These methods uncover both obvious and subtle issues users face. Designers prioritize addressing the most critical pain points first.

Heat maps and user journey mapping visualize problem areas. These tools help designers understand where users struggle or abandon tasks. By identifying pain points, designers can focus their efforts on the most impactful improvements.

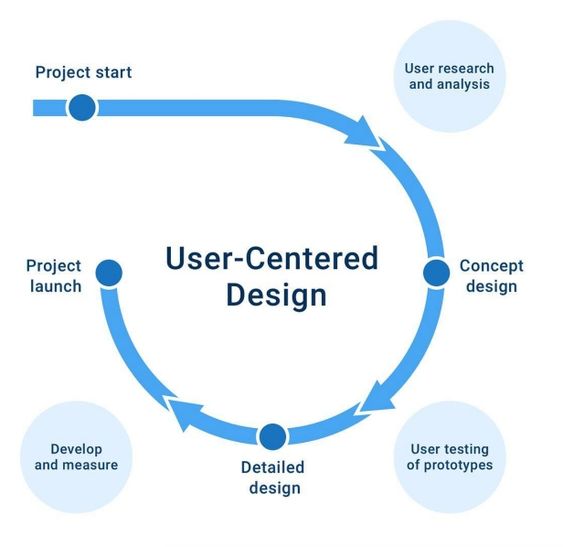

Iterative Design Process

The iterative design process involves continuous refinement based on user feedback. Designers create prototypes, test with users, and make improvements in cycles. This approach ensures steady progress towards a more user-friendly product.

Each iteration addresses specific issues identified in previous rounds. Designers implement changes, then test again to verify improvements. This cyclical process helps catch new issues that may arise from modifications.

Rapid prototyping allows quick testing of multiple solutions. Designers can explore various options before committing to major changes. A/B testing compares different design versions to determine which performs better with users.

Implementing Solutions

Designers translate user feedback into actionable solutions. They prioritize fixes based on impact and feasibility. Quick wins, such as adjusting button placement or clarifying instructions, can be implemented swiftly.

More complex issues may require redesigning entire features or workflows. Designers work closely with developers to ensure technical feasibility of proposed solutions. They balance user needs with technical constraints and business goals.

User testing validates implemented solutions. Designers compare metrics before and after changes to measure improvement. Positive results confirm effective solutions, while unexpected outcomes prompt further refinement.

Remote Usability Testing Tools and Techniques

Remote usability testing enables researchers to gather valuable user insights from participants in different locations. This approach offers flexibility and cost-effectiveness while maintaining the quality of feedback.

Choosing the Right Tools

Screen-sharing software is essential for moderated remote usability testing. Tools like Zoom, Skype, or GoToMeeting allow researchers to observe participants’ actions in real time. User testing platforms such as UserTesting or TryMyUI provide specialized features for remote studies.

Recording software captures participants’ screens and audio for later analysis. Platforms like Lookback and UserZoom offer integrated recording capabilities. These tools often include features for note-taking and timestamp marking.

Task management tools help organize test scenarios and track participant progress. Trello or Asana can be used to create task lists and share them with remote participants.

Techniques for Remote Testing

Moderated testing involves real-time interaction between researchers and participants. This method allows for follow-up questions and clarifications during the session. It’s ideal for complex tasks or when immediate feedback is crucial.

Unmoderated testing lets participants complete tasks independently. This approach is useful for gathering large amounts of data quickly. Researchers can use automated tools to collect metrics like task completion time and success rates.

Surveys and questionnaires complement testing sessions by gathering additional insights. Tools like Google Forms or SurveyMonkey facilitate the creation and distribution of these instruments.

Conducting A/B Testing

A/B testing compares two versions of a design to determine which performs better. Remote A/B testing tools like Optimizely or VWO allow researchers to create and run experiments across different devices and browsers.
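
An A/B test needs each visitor to see the same variant on every visit. One common approach, shown here as a sketch and not as a description of how Optimizely or VWO work internally, hashes a stable user ID together with an experiment name and maps the result to a bucket. The experiment name and the 50/50 split are assumptions.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically assign a user to variant 'A' or 'B'.

    The same (user_id, experiment) pair always yields the same variant,
    and different experiment names bucket users independently.
    """
    if not 0.0 <= split <= 1.0:
        raise ValueError("split must be between 0 and 1")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Keep 53 bits so the division below is exact and the bucket stays in [0, 1).
    value = int.from_bytes(digest[:8], "big") >> 11
    bucket = value / 2**53
    return "A" if bucket < split else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "signup-button-color")] += 1
    print(counts)
```

Because assignment depends only on the inputs, no lookup table is needed, and the split across many users should land close to the requested ratio.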

Segmentation helps target specific user groups for more focused A/B tests. Researchers can divide participants based on demographics, behavior, or device preferences.

Analytics tools track key performance indicators during A/B tests. Metrics such as conversion rates, click-through rates, and time on page provide quantitative data to support decision-making.
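
Deciding which variant performs better means checking whether the difference in conversion rates is larger than chance would explain. A two-proportion z-test is one standard way to do this; commercial tools may use other methods, such as sequential or Bayesian statistics, so treat this as an illustration. It assumes independent visitors and reasonably large samples, and the counts in the example are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    if min(n_a, n_b) <= 0:
        raise ValueError("sample sizes must be positive")
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pooled rate is 0 or 1; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Variant A: 200 of 4,000 visitors converted; variant B: 250 of 4,000.
    z, p = two_proportion_z_test(200, 4000, 250, 4000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```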

Evaluating Usability with Metrics

Usability metrics provide objective data to assess and improve digital product experiences. Quantitative and qualitative measurements offer insights into effectiveness, efficiency, and user satisfaction.

Usability Metrics

Usability metrics quantify how well users interact with a product. Common metrics include task completion rates, time on task, and error rates. These measurements help identify problems and track improvements over time.

Task completion rates show the percentage of users who successfully finish specific actions. Lower rates may indicate confusing interfaces or workflows that need refinement.

Time on task measures how long users take to complete activities. Longer times often reveal areas where the product could be more efficient or intuitive.

Error rates track mistakes users make while interacting with the product. High error rates point to potential usability issues that require attention.

User Satisfaction and Performance

User satisfaction metrics gauge how people feel about their product experience. Surveys and rating scales collect this feedback.

The System Usability Scale (SUS) is a widely used ten-item questionnaire for measuring perceived usability. It yields a score from 0 to 100 that can be benchmarked against other products; a score of about 68 is commonly cited as average.
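
SUS scoring follows a fixed formula: each of the ten items is rated from 1 to 5, odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the summed contributions are multiplied by 2.5. A minimal implementation, with a hypothetical response set in the usage line:

```python
def sus_score(responses: list[int]) -> float:
    """Compute a System Usability Scale score (0-100) from ten 1-5 ratings.

    Odd-numbered items: contribution = rating - 1.
    Even-numbered items: contribution = 5 - rating.
    The summed contributions (0-40) are multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for index, rating in enumerate(responses, start=1):
        total += (rating - 1) if index % 2 == 1 else (5 - rating)
    return total * 2.5


if __name__ == "__main__":
    print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1]))
```

A study-level score is usually the mean of the individual participants' scores.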

Performance metrics assess how effectively users accomplish their goals. These include:

- Success rate: Percentage of tasks completed correctly

- Time to complete tasks

- Number of errors made

- Efficiency: Ratio of successful outcomes to time or effort spent
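
The efficiency ratio can be computed in more than one way. This sketch shows two formulations that appear in usability-metrics writing: time-based efficiency (each successful attempt contributes 1/t, averaged over all attempts) and overall relative efficiency (share of total time spent on attempts that succeeded). The `(completed, minutes)` tuple format and the example numbers are assumptions.

```python
def time_based_efficiency(attempts: list[tuple[bool, float]]) -> float:
    """Mean successful outcomes per minute: each success adds 1/t, each failure adds 0."""
    if not attempts:
        raise ValueError("no attempts recorded")
    if any(minutes <= 0 for _, minutes in attempts):
        raise ValueError("durations must be positive")
    return sum((1 / minutes) if success else 0.0 for success, minutes in attempts) / len(attempts)


def overall_relative_efficiency(attempts: list[tuple[bool, float]]) -> float:
    """Share of total task time that was spent on attempts that succeeded."""
    total = sum(minutes for _, minutes in attempts)
    if total <= 0:
        raise ValueError("total time must be positive")
    return sum(minutes for success, minutes in attempts if success) / total


if __name__ == "__main__":
    # (completed, duration in minutes) for four hypothetical attempts
    attempts = [(True, 2.0), (True, 1.0), (False, 4.0), (True, 3.0)]
    print(f"{time_based_efficiency(attempts):.3f} successful tasks per minute")
    print(f"{overall_relative_efficiency(attempts):.0%} of time spent on successful attempts")
```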

Navigational Efficiency

Navigational efficiency metrics evaluate how easily users move through a digital product. These measurements help optimize information architecture and menu structures.

Click depth tracks how many clicks it takes to reach specific content or features. Lower click depths generally indicate more intuitive navigation.
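
When the site structure is known, the minimum click depth of every page can be computed with a breadth-first search over the link graph. This sketch assumes a hypothetical site map given as a dictionary of page-to-links; it measures the shortest possible path, which can then be compared with the number of clicks participants actually needed in sessions.

```python
from collections import deque


def click_depths(site_map: dict[str, list[str]], start: str) -> dict[str, int]:
    """Minimum number of clicks from the start page to every reachable page.

    Breadth-first search visits pages in order of distance, so the first
    time a page is reached is along a shortest path.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in site_map.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


if __name__ == "__main__":
    site = {
        "home": ["products", "support"],
        "products": ["laptops", "phones"],
        "laptops": ["laptop-x1"],
        "support": ["contact"],
    }
    for page, depth in sorted(click_depths(site, "home").items(), key=lambda kv: kv[1]):
        print(depth, page)
```

Pages missing from the result are unreachable from the start page, which is itself a navigation finding.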

Time to first click measures how quickly users make their first click after a task begins. Shorter times suggest that the interface makes the next step clear.
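
Time to first click can be derived from a timestamped event log for each participant and task. This sketch assumes hypothetical `"start"` and `"click"` event labels and reports the median across participants, since task timings are often skewed by a few slow sessions.

```python
from statistics import median
from typing import Optional


def time_to_first_click(events: list[tuple[float, str]]) -> Optional[float]:
    """Seconds between a task's 'start' event and the first later 'click'.

    events holds (timestamp_seconds, event_type) pairs for one participant
    and one task. Returns None if the task never started or no click followed.
    """
    starts = [t for t, kind in events if kind == "start"]
    if not starts:
        return None
    start = min(starts)
    clicks = [t for t, kind in events if kind == "click" and t >= start]
    return min(clicks) - start if clicks else None


def median_first_click(sessions: list[list[tuple[float, str]]]) -> Optional[float]:
    """Median time to first click, ignoring sessions without a click."""
    values = [v for v in (time_to_first_click(s) for s in sessions) if v is not None]
    return median(values) if values else None


if __name__ == "__main__":
    sessions = [
        [(0.0, "start"), (3.2, "click"), (5.0, "click")],
        [(10.0, "start"), (18.5, "click")],
        [(0.0, "start")],  # participant never clicked
    ]
    print(median_first_click(sessions))
```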

User flow analysis examines common paths taken through the product. This reveals which navigation options are most used and which may be overlooked or confusing.
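
A basic user flow analysis counts complete paths and page-to-page transitions across sessions. This is a minimal sketch assuming each session has already been reduced to an ordered list of hypothetical page names.

```python
from collections import Counter


def top_paths(sessions: list[list[str]], k: int = 3) -> list[tuple[tuple[str, ...], int]]:
    """The k most frequent complete navigation paths across sessions."""
    return Counter(tuple(path) for path in sessions).most_common(k)


def transition_counts(sessions: list[list[str]]) -> Counter:
    """How often users moved directly from one page to the next."""
    counts: Counter = Counter()
    for path in sessions:
        counts.update(zip(path, path[1:]))  # consecutive (from, to) pairs
    return counts


if __name__ == "__main__":
    sessions = [
        ["home", "search", "product", "cart"],
        ["home", "menu", "category", "product", "cart"],
        ["home", "search", "product", "cart"],
        ["home", "menu", "help"],
    ]
    print(top_paths(sessions, k=2))
    for (src, dst), n in transition_counts(sessions).most_common():
        print(f"{src} -> {dst}: {n}")
```

Transitions that appear rarely, or only just before users leave, point to navigation options worth examining in follow-up sessions.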

Usability Testing in Different Contexts

Usability testing adapts to various product types and user needs. The methods and focus areas shift depending on the specific context, target audience, and industry requirements.

Testing for Web and Digital Products

Web and digital product usability testing focuses on user interface elements and navigation flows. Testers evaluate how easily users can complete tasks like finding information, making purchases, or submitting forms.

Common techniques include:

- A/B testing of different layouts or features

- Heat mapping to track user attention and clicks

- User journey mapping to identify pain points

Mobile-specific testing addresses touchscreen interactions, app performance, and responsiveness across devices. Testers often use screen recording software to capture user behavior and gather feedback.

Testing for Accessibility

Accessibility testing ensures digital products are usable by people with disabilities. This involves checking compliance with guidelines like WCAG (Web Content Accessibility Guidelines).

Key areas of focus include:

- Screen reader compatibility

- Keyboard navigation

- Color contrast ratios

- Alt text for images
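
Color contrast checks follow the WCAG 2.x formula: convert each sRGB channel to linear light, compute relative luminance, and take (L1 + 0.05) / (L2 + 0.05) with L1 the lighter color. Level AA requires at least 4.5:1 for normal text and 3:1 for large text. A minimal checker:

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """Contrast ratio from 1:1 (identical) to 21:1 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: tuple[int, int, int], bg: tuple[int, int, int], large_text: bool = False) -> bool:
    """WCAG AA: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    white = (255, 255, 255)
    for gray in (0x76, 0x77):
        ratio = contrast_ratio((gray, gray, gray), white)
        print(f"#{gray:02x}{gray:02x}{gray:02x} on white: {ratio:.2f}:1")
```

Automated checks like this complement, but do not replace, sessions with participants who use assistive technology.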

Testers often use specialized tools to simulate different user conditions. They may also recruit participants with various disabilities to provide authentic feedback on the user experience.

Industry-Specific Usability Testing

Different industries have unique usability requirements based on their products and user base. Healthcare applications, for example, need intuitive interfaces for medical professionals and patients alike.

Financial services focus on secure transactions and clear presentation of complex information. E-learning platforms prioritize engagement and knowledge retention.

Testers in these fields must understand industry regulations and best practices. They often collaborate with subject matter experts to design relevant test scenarios.

Usability testing in specialized industries may involve:

- Task analysis for critical workflows

- Compliance checks with industry standards

- Evaluation of domain-specific terminology and layouts

Best Practices and Common Pitfalls

Effective usability testing requires careful planning and execution. Implementing best practices while avoiding common mistakes is crucial for obtaining valuable insights.

Usability Testing Best Practices

Clearly define test objectives before starting. Recruit participants who match your target user demographic. Create realistic tasks that align with typical user goals.

Prepare a detailed test script to ensure consistency across sessions. Use a mix of quantitative and qualitative measures to gather comprehensive data.

Conduct pilot tests to refine your methodology. Encourage participants to think aloud during the test. Take notes on both verbal and non-verbal feedback.

Analyze results promptly and share findings with the design team. Prioritize issues based on severity and frequency. Develop actionable recommendations for improvements.

Avoiding Common Errors in Usability Testing

Avoid leading questions that bias participant responses. Don’t intervene during tasks unless absolutely necessary. Refrain from defending the product or explaining design decisions.

Beware of the observer effect influencing participant behavior. Don’t rely solely on user preferences; focus on actual performance metrics.

Avoid testing with too few participants, which can lead to unreliable results. Don’t overlook the importance of proper task selection and wording.

Be cautious of over-generalizing findings from a limited sample size. Avoid rushing through analysis and missing important insights.
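
A confidence interval around a task completion rate shows why small samples call for caution. The adjusted Wald interval (also known as Agresti-Coull) is often recommended for small-sample completion rates; the 4-of-5 and 80-of-100 results in the usage lines are hypothetical.

```python
import math


def adjusted_wald_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate confidence interval for a completion rate (95% when z = 1.96).

    Adds z^2/2 successes and z^2 trials before applying the usual
    normal-approximation formula, which behaves better for small n.
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    n_adj = n + z**2
    p_adj = (successes + z**2 / 2) / n_adj
    margin = z * math.sqrt(p_adj * (1 - p_adj) / n_adj)
    return max(0.0, p_adj - margin), min(1.0, p_adj + margin)


if __name__ == "__main__":
    for done, total in [(4, 5), (80, 100)]:
        low, high = adjusted_wald_interval(done, total)
        print(f"{done}/{total} completed: 95% CI {low:.0%} to {high:.0%}")
```

Both cases show an 80% observed completion rate, but the five-person interval is several times wider, so it supports much weaker conclusions.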

Remember that usability testing is iterative. Don’t expect to solve all issues in a single round of testing.

Frequently Asked Questions

Usability testing employs various methods and tools to evaluate software products. It plays a crucial role in improving user experiences and differs from other types of testing.

What are the different methods commonly used in usability testing?

Common usability testing methods include think-aloud protocols, eye tracking, and A/B testing. Think-aloud protocols involve participants verbalizing their thoughts as they use a product. Eye tracking measures where users look on a screen.

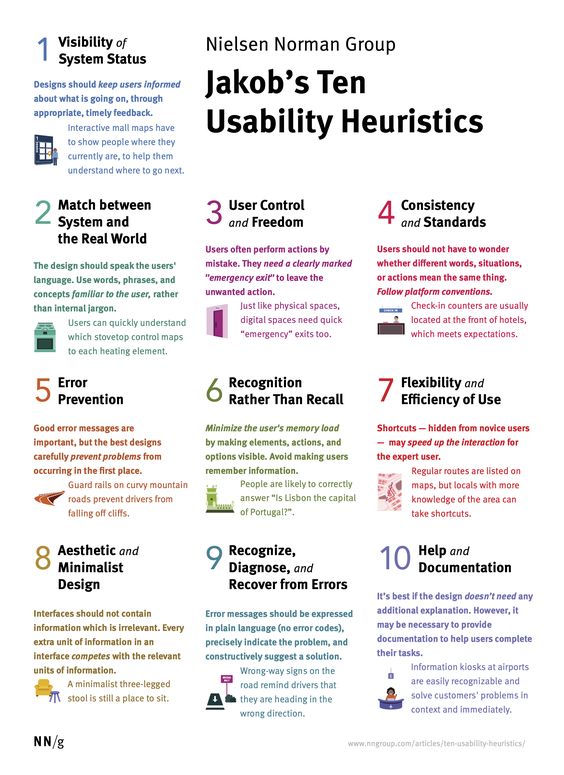

A/B testing compares two versions of a design to see which performs better. Other methods include card sorting, heuristic evaluation, and remote usability testing.

Can you provide examples where usability testing significantly improved software products?

Dropbox improved its sign-up process after usability testing revealed confusion among new users. The company simplified the steps, resulting in a 16% increase in completion rates.

Google Maps enhanced its interface based on usability test findings. The changes led to faster route selection and improved user satisfaction.

How does usability testing differ from user acceptance testing?

Usability testing focuses on how easy a product is to use, while user acceptance testing verifies whether a system meets business requirements. Usability testing often starts early in development, frequently with prototypes, and typically involves a smaller group of participants.

User acceptance testing happens near the end of development and includes a larger group of end-users. It aims to confirm that the system functions as intended in real-world scenarios.

Which tools are most effective for conducting usability testing?

Popular usability testing tools include UserTesting, Hotjar, and Lookback. UserTesting allows remote testing with video recordings of user interactions. Hotjar provides heatmaps and session recordings.

Lookback enables live observation of user tests. Other effective tools include Optimal Workshop for card sorting and tree testing, and Maze for rapid, unmoderated testing of prototypes.

What are the main differences between usability testing and user testing?

Usability testing evaluates a product’s ease of use, while user testing is a broader term encompassing various user research methods. Usability testing focuses on specific tasks and measures efficiency, effectiveness, and satisfaction.

User testing may include concept testing, preference testing, and other research techniques. It aims to gather general feedback on user experiences and preferences.

What are the essential steps involved in conducting usability testing in UX design?

The first step is defining test objectives and creating a test plan. Next, recruit participants who match the target user profile. Prepare test materials, including tasks and scenarios.

Conduct the test sessions, observing and recording user interactions. Analyze the data collected and identify usability issues. Finally, report findings and recommend improvements based on the test results.
